Boosting

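Boosting is an ensemble meta-algorithm in machine learning. It is used mainly to reduce bias, and also variance,[1] in supervised learning, and the term also refers to a family of machine learning algorithms that convert weak learners into strong learners.[2] Boosting addresses a question posed by Michael Kearns and Leslie Valiant:[3] can a set of "weak learners" be combined into a single "strong learner"? A weak learner is a classifier whose predictions are only slightly better than random guessing; a strong learner is a classifier whose predictions are very close to the true labels.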
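
In the PAC (probably approximately correct) learning framework, these informal definitions are usually made precise roughly as follows. This is a sketch of the standard textbook formulation for binary classification; the symbols $D$, $\gamma$, $\varepsilon$ and $\delta$ are notation chosen here, not taken from the article.

```latex
% Error of a hypothesis h under a distribution D over labelled examples (x, y)
\operatorname{err}_D(h) = \Pr_{(x,y)\sim D}\bigl[h(x) \neq y\bigr]

% Weak learner: for some fixed edge \gamma > 0, for every D, with probability at least 1 - \delta
\operatorname{err}_D(h) \le \tfrac{1}{2} - \gamma

% Strong learner: for every D and every \varepsilon > 0, with probability at least 1 - \delta
\operatorname{err}_D(h) \le \varepsilon
```

Random guessing on two classes has error $1/2$, so $\gamma$ measures how much better than chance the weak learner is required to be.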

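Robert Schapire's affirmative answer to Kearns and Valiant's question, given in a 1990 paper,[4] has had significant ramifications in machine learning and statistics, most notably leading to the development of boosting.[5]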

Boosting algorithms

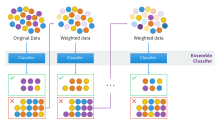

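Most boosting algorithms consist of iteratively training weak classifiers and adding them to a final strong classifier. When a weak classifier is added, it is usually weighted according to its classification accuracy. After each weak learner is added, the data are usually reweighted so that examples misclassified in earlier rounds receive more emphasis.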
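
The loop described above can be sketched with discrete AdaBoost, one specific boosting algorithm, using decision stumps (single-threshold rules) on one-dimensional inputs as the weak learners. This is a minimal illustration under those assumptions, not a reference implementation; the names `Stump`, `fit_stump`, `adaboost` and `predict` are hypothetical. Each stump's vote is weighted by its accuracy through `alpha`, and the example weights are multiplied up for misclassified points before the next round.

```python
import math
from typing import List, Tuple

# Hypothetical decision stump on 1-D inputs: (threshold, polarity).
# It predicts `polarity` when x < threshold, and `-polarity` otherwise.
Stump = Tuple[float, int]


def stump_predict(stump: Stump, x: float) -> int:
    threshold, polarity = stump
    return polarity if x < threshold else -polarity


def fit_stump(xs: List[float], ys: List[int],
              weights: List[float]) -> Tuple[Stump, float]:
    """Weak learner: return the stump with the lowest weighted error."""
    values = sorted(set(xs))
    # Candidate thresholds: below all points, between neighbours, above all points.
    thresholds = ([values[0] - 1.0]
                  + [(a + b) / 2 for a, b in zip(values, values[1:])]
                  + [values[-1] + 1.0])
    best: Stump = (thresholds[0], 1)
    best_err = float("inf")
    for t in thresholds:
        for polarity in (1, -1):
            err = sum(w for x, y, w in zip(xs, ys, weights)
                      if stump_predict((t, polarity), x) != y)
            if err < best_err:
                best, best_err = (t, polarity), err
    return best, best_err


def adaboost(xs: List[float], ys: List[int],
             rounds: int = 10) -> List[Tuple[float, Stump]]:
    """Discrete AdaBoost; labels must be +1 or -1."""
    n = len(xs)
    weights = [1.0 / n] * n  # start from uniform weights
    ensemble: List[Tuple[float, Stump]] = []
    for _ in range(rounds):
        stump, err = fit_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)  # keep log() finite
        # More accurate weak learners get a larger vote.
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, stump))
        # Reweight: misclassified points grow by e^alpha, correct ones shrink by e^-alpha.
        weights = [w * math.exp(-alpha * y * stump_predict(stump, x))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def predict(ensemble: List[Tuple[float, Stump]], x: float) -> int:
    """Strong classifier: sign of the alpha-weighted vote."""
    score = sum(alpha * stump_predict(stump, x) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1


if __name__ == "__main__":
    # No single stump separates this interval-shaped labelling.
    xs = [1, 2, 3, 4, 5, 6]
    ys = [1, 1, -1, -1, 1, 1]
    model = adaboost(xs, ys, rounds=3)
    print([predict(model, x) for x in xs])  # → [1, 1, -1, -1, 1, 1]
```

In this toy data set the best single stump still misclassifies two of the six points, while the weighted vote of three stumps labels all six correctly, which is the weak-to-strong effect in miniature.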

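A classic example of a boosting algorithm is AdaBoost. More recent examples include LPBoost, TotalBoost, BrownBoost, MadaBoost and LogitBoost. Many boosting methods can be interpreted, within the AnyBoost framework, as performing gradient descent in function space on a convex loss function.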
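
The function-space gradient-descent view can be sketched as follows for $m$ training examples with labels $y_i \in \{-1,+1\}$. It follows the general AnyBoost idea, but the notation ($F$, $c$, $w_i$, the step size $\alpha$, and the inner product $\langle F, G\rangle = \frac{1}{m}\sum_i F(x_i)G(x_i)$) is chosen here for illustration, and the exponential loss is used as the worked case.

```latex
% Combined classifier and empirical cost with a convex, decreasing margin loss c
F_t(x) = \sum_{s=1}^{t} \alpha_s h_s(x), \qquad
C(F) = \frac{1}{m} \sum_{i=1}^{m} c\bigl(y_i F(x_i)\bigr)

% Next weak hypothesis: best alignment with the negative functional gradient
h_{t+1} = \arg\max_{h} \; -\langle \nabla C(F_t), h \rangle
        = \arg\max_{h} \; \sum_{i=1}^{m} w_i \, y_i \, h(x_i),
\qquad w_i \propto -c'\bigl(y_i F_t(x_i)\bigr) \ge 0

% Update with a step size chosen by line search
F_{t+1} = F_t + \alpha_{t+1} h_{t+1}

% Exponential loss c(z) = e^{-z}: weights and step size reduce to AdaBoost's
w_i \propto e^{-y_i F_t(x_i)}, \qquad
\alpha_{t+1} = \tfrac{1}{2} \ln \frac{1 - \epsilon_{t+1}}{\epsilon_{t+1}},
\qquad \epsilon_{t+1} = \frac{\sum_{i:\, h_{t+1}(x_i) \neq y_i} w_i}{\sum_{i} w_i}
```

For $\pm 1$-valued $h$, $y_i h(x_i) = 1 - 2\,[h(x_i) \neq y_i]$, so maximizing the weighted sum is the same as minimizing the weighted error; this is why each descent step amounts to training a weak learner on reweighted data.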

Criticism

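In 2008, Philip M. Long of Google and Rocco A. Servedio of Columbia University published a paper arguing that these methods are flawed: when the training set contains mislabeled examples (random classification noise), some boosting algorithms, namely those based on convex potential functions, try to improve their accuracy on those examples but then cannot produce a model with accuracy better than 1/2.[6]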

See also

Implementations

- Orange, a free data mining software suite, module Orange.ensemble

- Weka, a machine learning toolset that offers various implementations of boosting algorithms such as AdaBoost and LogitBoost

- R package GBM (Generalized Boosted Regression Models), which implements extensions to Freund and Schapire's AdaBoost algorithm and Friedman's gradient boosting machine

- jboost, which implements AdaBoost, LogitBoost, RobustBoost, Boostexter and alternating decision trees

References

Notes

- ^ Leo Breiman. Bias, Variance, and Arcing Classifiers (PDF). Technical Report. 1996 [2015-01-19]. (Archived from the original (PDF) on 2015-01-19).

Arcing [Boosting] is more successful than bagging in variance reduction

- ^ Zhou Zhi-Hua. Ensemble Methods: Foundations and Algorithms. Chapman and Hall/CRC. 2012: 23. ISBN 978-1439830031.

The term boosting refers to a family of algorithms that are able to convert weak learners to strong learners

- ^ Michael Kearns (1988); Thoughts on Hypothesis Boosting, Unpublished manuscript (Machine Learning class project, December 1988)

- ^ Schapire, Robert E. The Strength of Weak Learnability (PDF). Machine Learning. 1990, 5 (2): 197–227 [2012-08-23]. CiteSeerX 10.1.1.20.723. S2CID 53304535. doi:10.1007/bf00116037. (Archived from the original (PDF) on 2012-10-10).

- ^ Leo Breiman. Arcing classifier (with discussion and a rejoinder by the author). Ann. Stat. 1998, 26 (3): 801–849. doi:10.1214/aos/1024691079.

Schapire (1990) proved that boosting is possible. (Page 823)

- ^ Philip M. Long, Rocco A. Servedio, "Random Classification Noise Defeats All Convex Potential Boosters" (PDF). [2014-04-17]. (Archived from the original (PDF) on 2021-01-18).

Further reading

- Yoav Freund and Robert E. Schapire (1997); A Decision-Theoretic Generalization of On-line Learning and an Application to Boosting, Journal of Computer and System Sciences, 55(1):119–139

- Robert E. Schapire and Yoram Singer (1999); Improved Boosting Algorithms Using Confidence-Rated Predictors, Machine Learning, 37(3):297–336

External links

- Robert E. Schapire (2003); The Boosting Approach to Machine Learning: An Overview, MSRI (Mathematical Sciences Research Institute) Workshop on Nonlinear Estimation and Classification

- An up-to-date collection of papers on boosting