Boosting: Difference between revisions


Edited by JimGrassroot (no edit summary)


Revision as of 11:57, 5 May 2022 (Thursday)


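Boosting is an ensemble meta-algorithm in machine learning. It is used mainly to reduce bias, and also variance, in supervised learning.[1] The term also refers to a family of machine learning algorithms that convert weak learners into strong ones.[2] Boosting grew out of a question posed by Michael Kearns and Leslie Valiant:[3] can a set of "weak learners" be combined to create a single "strong learner"? A weak learner is a classifier whose predictions are only slightly better than random guessing. A strong learner is a classifier whose predictions come very close to the true labels.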

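Robert Schapire gave an affirmative answer to the question of Kearns and Valiant in a 1990 paper.[4] His result had a major impact on machine learning and statistics, most notably by leading to the development of boosting.[5]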

Boosting algorithms

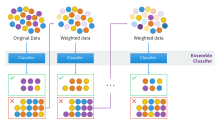

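Most boosting algorithms work by iteratively training weak classifiers and adding them to a final strong classifier. As each weak classifier is added, it is usually given a weight based on its classification accuracy. After a weak learner is added, the training data are typically reweighted to emphasize the points that earlier learners misclassified.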
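AdaBoost's version of this loop can be sketched as follows. This is a minimal, illustrative sketch and not a reference implementation. It assumes binary labels in {+1, -1} and one-dimensional inputs. It uses decision stumps (single-threshold classifiers) as the weak learners. The function names `stump_predict`, `train_stump`, `adaboost` and `predict` are hypothetical. Each round fits a stump to the current weights and gives it a vote of α = ½ ln((1 − ε)/ε), where ε is its weighted error. It then scales up the weights of the points that stump got wrong.

```python
import math


def stump_predict(x, threshold, polarity):
    """A decision stump on 1-D input: +polarity if x >= threshold, else -polarity."""
    return polarity if x >= threshold else -polarity


def train_stump(xs, ys, w):
    """Weak learner: exhaustively pick the stump with the lowest weighted error."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            err = sum(wi for xi, yi, wi in zip(xs, ys, w)
                      if stump_predict(xi, threshold, polarity) != yi)
            if best is None or err < best[0]:
                best = (err, threshold, polarity)
    return best


def adaboost(xs, ys, rounds=10):
    """Train an AdaBoost ensemble of stumps; labels must be +1 or -1."""
    n = len(xs)
    w = [1.0 / n] * n                          # uniform weights to start
    ensemble = []                              # (alpha, threshold, polarity) per round
    for _ in range(rounds):
        err, threshold, polarity = train_stump(xs, ys, w)
        err = min(max(err, 1e-10), 1 - 1e-10)  # keep log() finite for perfect stumps
        alpha = 0.5 * math.log((1 - err) / err)  # lower error -> larger vote
        ensemble.append((alpha, threshold, polarity))
        # Misclassified points (y * h = -1) are scaled by e^alpha, correct ones by e^-alpha.
        w = [wi * math.exp(-alpha * yi * stump_predict(xi, threshold, polarity))
             for xi, yi, wi in zip(xs, ys, w)]
        total = sum(w)
        w = [wi / total for wi in w]           # renormalise to a probability distribution
    return ensemble


def predict(ensemble, x):
    """Sign of the alpha-weighted vote of all stumps (ties go to +1)."""
    score = sum(alpha * stump_predict(x, threshold, polarity)
                for alpha, threshold, polarity in ensemble)
    return 1 if score >= 0 else -1
```

As a usage example, take the six points `xs = [1, 2, 3, 4, 5, 6]` with labels `[1, 1, -1, -1, 1, 1]`. No single stump classifies more than four of these points correctly. However, `adaboost(xs, ys, rounds=3)` classifies all six correctly, because later stumps concentrate on the points the earlier ones missed.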

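A classic example of a boosting algorithm is AdaBoost. More recent examples include LPBoost, TotalBoost, BrownBoost, MadaBoost and LogitBoost. Many boosting methods can be interpreted, within the AnyBoost framework, as performing gradient descent in function space on a convex loss function.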
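The AnyBoost view can be made concrete with a small sketch of gradient descent in function space. This sketch rests on several assumptions chosen for illustration:

- The convex cost is the logistic loss c(z) = log(1 + e^(−z)) on the margin z = y·F(x).
- The weak learners are ±1 decision stumps on 1-D inputs.
- Each round takes a fixed `step` rather than a line search.

All function names are hypothetical. Each round weights every training point by w_i = −c′(y_i F(x_i)). It then adds the stump h that maximizes Σ w_i y_i h(x_i), which is the direction most aligned with the negative functional gradient of the cost.

```python
import math


def stump_predict(x, threshold, polarity):
    """A decision stump on 1-D input: +polarity if x >= threshold, else -polarity."""
    return polarity if x >= threshold else -polarity


def logistic_cost(margins):
    """Average of the convex margin loss c(z) = log(1 + e^-z) over margins z = y * F(x)."""
    return sum(math.log1p(math.exp(-z)) for z in margins) / len(margins)


def anyboost(xs, ys, rounds=20, step=0.5):
    """Gradient descent in function space on the logistic cost (AnyBoost-style sketch).

    Labels must be +1 or -1. Returns the ensemble and the cost before/after each round.
    """
    scores = [0.0] * len(xs)                   # current F(x_i); F starts at 0
    ensemble = []
    costs = [logistic_cost([y * f for y, f in zip(ys, scores)])]
    for _ in range(rounds):
        # w_i = -c'(y_i F(x_i)) = 1 / (1 + e^{y_i F(x_i)}): largest for small or negative margins
        w = [1.0 / (1.0 + math.exp(y * f)) for y, f in zip(ys, scores)]
        # Choose the stump most aligned with the negative functional gradient,
        # i.e. maximising sum_i w_i * y_i * h(x_i).
        best = None
        for threshold in sorted(set(xs)):
            for polarity in (1, -1):
                edge = sum(wi * yi * stump_predict(xi, threshold, polarity)
                           for xi, yi, wi in zip(xs, ys, w))
                if best is None or edge > best[0]:
                    best = (edge, threshold, polarity)
        edge, threshold, polarity = best
        if edge <= 0:                          # no stump lowers the cost to first order
            break
        ensemble.append((step, threshold, polarity))
        scores = [f + step * stump_predict(x, threshold, polarity)
                  for x, f in zip(xs, scores)]
        costs.append(logistic_cost([y * f for y, f in zip(ys, scores)]))
    return ensemble, costs


def anyboost_predict(ensemble, x):
    """Sign of F(x) = sum of step * h(x) over the chosen stumps."""
    score = sum(step * stump_predict(x, threshold, polarity)
                for step, threshold, polarity in ensemble)
    return 1 if score >= 0 else -1
```

As a usage example, take four points `[1, 2, 3, 4]` labelled `[-1, -1, 1, 1]`. Every round picks the same separating stump, and the cost falls from log 2 towards 0.

As a design note, the loss choice matters. Replacing the logistic loss with the exponential loss e^(−z), and the fixed `step` with an exact line search, turns this loop into AdaBoost's reweighting and vote α.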

Criticism

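In 2008, Philip M. Long of Google and Rocco A. Servedio of Columbia University published a paper arguing that these methods are flawed. They considered training sets that contain mislabeled examples (random classification noise). On such data, some boosting algorithms, specifically convex potential boosters, try hard to classify the mislabeled examples correctly, yet fail to produce a model with accuracy greater than 1/2.[6]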

See also

Implementations

- Orange, a free data mining software suite: module Orange.ensemble

- Weka, a machine learning toolset that offers various implementations of boosting algorithms such as AdaBoost and LogitBoost

- R package GBM (Generalized Boosted Regression Models), which implements extensions to Freund and Schapire's AdaBoost algorithm and Friedman's gradient boosting machine

- jboost: AdaBoost, LogitBoost, RobustBoost, BoosTexter and alternating decision trees

References

Footnotes

- [1] Leo Breiman. Bias, Variance, and Arcing Classifiers (PDF). Technical report. 1996 [accessed 19 January 2015]. Archived from the original (PDF) on 2015-01-19. Quote: "Arcing [Boosting] is more successful than bagging in variance reduction"

- [2] Zhou Zhi-Hua. Ensemble Methods: Foundations and Algorithms. Chapman and Hall/CRC. 2012: 23. ISBN 978-1439830031. Quote: "The term boosting refers to a family of algorithms that are able to convert weak learners to strong learners"

- [3] Michael Kearns (1988). Thoughts on Hypothesis Boosting. Unpublished manuscript (Machine Learning class project, December 1988).

- [4] Robert E. Schapire. The Strength of Weak Learnability (PDF). Machine Learning. 1990, 5 (2): 197–227 [accessed 2012-08-23]. CiteSeerX 10.1.1.20.723. S2CID 53304535. doi:10.1007/bf00116037. Archived from the original (PDF) on 2012-10-10.

- [5] Leo Breiman. Arcing classifier (with discussion and a rejoinder by the author). Ann. Stat. 1998, 26 (3): 801–849. doi:10.1214/aos/1024691079. Quote: "Schapire (1990) proved that boosting is possible." (page 823)

- [6] Philip M. Long, Rocco A. Servedio. Random Classification Noise Defeats All Convex Potential Boosters (PDF). [accessed 2014-04-17]. Archived from the original (PDF) on 2021-01-18.

Further reading

- Yoav Freund and Robert E. Schapire (1997); A Decision-Theoretic Generalization of On-line Learning and an Application to Boosting, Journal of Computer and System Sciences, 55(1):119–139

- Robert E. Schapire and Yoram Singer (1999); Improved Boosting Algorithms Using Confidence-Rated Predictors, Machine Learning, 37(3):297–336

External links

- Robert E. Schapire (2003); The Boosting Approach to Machine Learning: An Overview, MSRI (Mathematical Sciences Research Institute) Workshop on Nonlinear Estimation and Classification

- An up-to-date collection of papers on boosting
